What are ETL processes? - Definition, advantages & purposes

Have you ever wondered how large companies manage to bring together mountains of data from different sources, cleanse it and then use it to make important decisions? It's not rocket science, but a meticulous method that forms the backbone of any modern data analysis.

In today's digital world, data is your most important asset. But data lying unstructured in different systems is like rough diamonds - valuable but useless until it is polished. This is where the ETL process comes into play. ETL stands for 'Extract, Transform, Load' and describes the three central steps with which raw data is prepared for data warehouses, data lakes or business intelligence analyses.

Extract, Transform, Load - these three steps are the key to transforming your raw data into meaningful information. The term 'ETL' is often used in the context of data integration and data migration and should be distinguished from related terms such as ELT (Extract, Load, Transform) and DWH (Data Warehouse), which each have their own focus and areas of application. In this article, we want to show you what ETL really means, why it is essential for your company and how we can optimize your data projects together.

Definition: What is the ETL process?

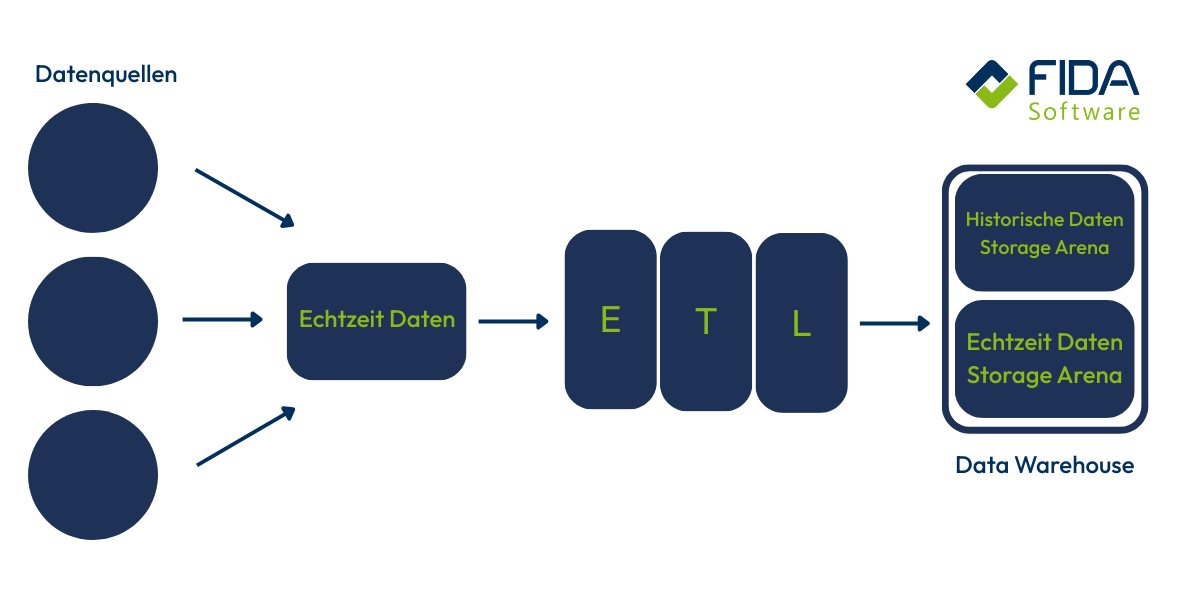

ETL is the technical method used in IT to efficiently integrate and standardize data from heterogeneous sources and make it available for analysis in a central target system. It is your tool for mastering the complexity of the data landscape. The use of an ETL process is necessary because company-relevant data comes from different internal and external sources.

When we talk about managing and analyzing large amounts of data, you will inevitably come across the ETL process. This process forms the foundation for every data warehouse and every BI solution. The ETL process consists of several clearly defined individual steps that run in sequence and structure the data preparation.

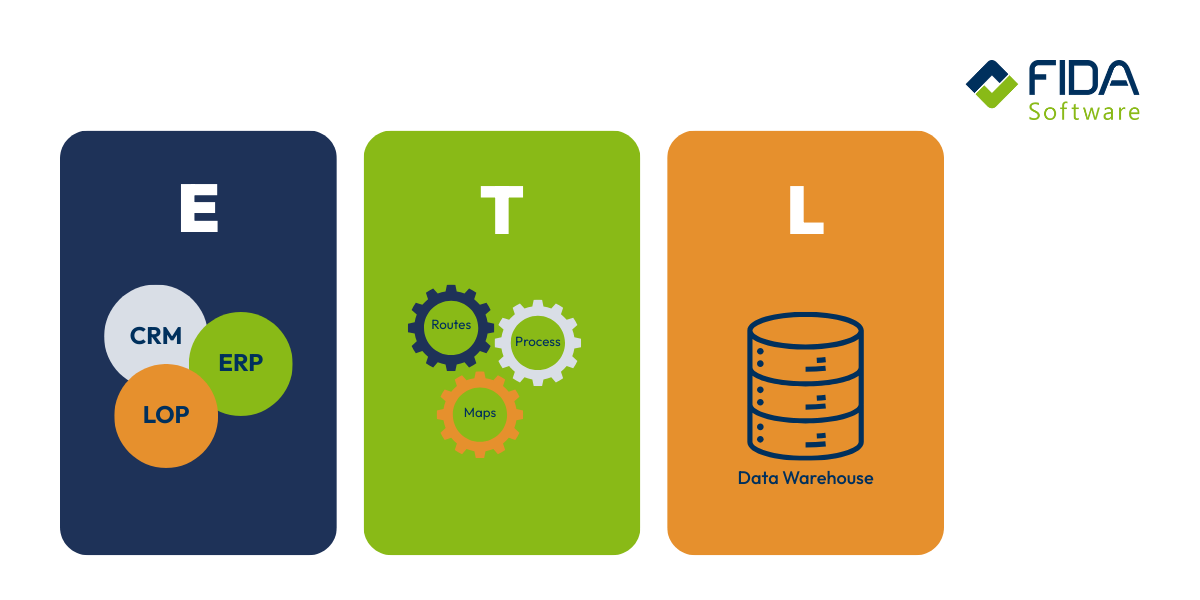

Your company-relevant data often comes from a wide variety of internal (e.g. ERP, CRM) and external sources (e.g. market data, social media). To make this data usable as information, the following three successive steps are necessary:

Extract / Extraction (data procurement): In this phase, the raw data is extracted from the various source systems.

Transform (data refinement): This is the crucial step in which the extracted data is transformed. The data transformation includes the standardization, conversion and cleansing of the raw data.

Load (data provision): In the final step, the fully prepared and structured data is transferred to the central target system - usually the data warehouse - where it is made accessible for your analytical applications.

These three steps ensure that consistent, cleansed and complete data reaches your data warehouse, so that your analyses rest on a high-quality data basis.
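The three steps can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the CSV content, the `customers` table and the function names are assumptions made for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (stands in for ERP/CRM data).
RAW_CSV = """customer_id,name,revenue
1, Alice ,100.50
2,Bob,not_available
3,Carol,75
"""


def extract(raw_text):
    """Extract: read raw records from the source without changing them."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: trim whitespace, convert types, drop invalid records."""
    cleaned = []
    for row in rows:
        try:
            revenue = float(row["revenue"])
        except ValueError:
            continue  # skip records whose revenue is not numeric
        cleaned.append((int(row["customer_id"]), row["name"].strip(), revenue))
    return cleaned


def load(rows, conn):
    """Load: write the prepared records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Run the three steps in sequence and return the loaded rows."""
    load(transform(extract(raw_text)), conn)
    return conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()
```

Calling `run_pipeline(RAW_CSV, sqlite3.connect(":memory:"))` loads only the two valid records. The row with the non-numeric revenue is filtered out in the transform step and never reaches the target.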

Why are ETL processes worthwhile?

Why should you make the effort to implement a structured ETL process? It's simple: ETL processes are used in various industries and business areas to organize data from different areas and optimize processes. They help organizations of all sizes to use resources more efficiently and enable the smooth migration of data between systems. They provide you with the necessary foundation to make data-driven decisions quickly and confidently.

Here are some of the key benefits you gain:

Higher data quality and consistency: The mandatory transformation phase means you clean up incorrect or inconsistent data even before it is loaded. The data you analyze is therefore standardized and reliable, and you can rely on the results.

Well-founded decisions: Only the central consolidation of all relevant information in your data warehouse gives you a holistic view of your business. You can see correlations, recognize trends and make decisions based on data rather than on instinct.

Time-saving analysis: As the data is already optimized and structured in the target system, your analysts and decision-makers no longer have to perform time-consuming manual preparation. They can start the actual analysis immediately and concentrate on adding value.

Compliance and auditability: The ETL process creates a clear history of how data was transferred from the source to the target system. This ensures a high level of traceability (data lineage) and helps you to comply more easily with legal requirements and internal data management guidelines.

Efficient system utilization: The computationally intensive transformation takes place outside the operational source systems. This avoids performance losses in your critical business applications while the data is being prepared for analysis.

ETL is also worthwhile in the following typical situations:

Complex, error-prone queries: If your current methods of querying and merging data are inefficient, error-prone or simply not feasible, ETL provides you with the necessary structure.

Centralization of analysis: You want to create a central instance for all your data analyses - your data warehouse. ETL ensures that all data is standardized and transported there.

Big data and heterogeneous formats: The ETL approach is also relevant when opening up and evaluating very large volumes of data (Big Data) from a wide variety of formats, as it provides the necessary standardization.

There is no limit to company size: ETL is not just for large corporations. This structured approach is also very important for small and medium-sized enterprises (SMEs), whose data volumes and market requirements are growing.

You also use ETL processes for technical tasks such as data migration between different applications or for data replication for backup and archiving purposes.

The three core processes in detail: E, T and L

In order to master the complexity of your data management, a deep understanding of the three phases of the ETL process is essential.

Step 1: Extract (obtaining the source data)

Extraction is the initial phase in which you pull the raw data from various operational source systems (such as ERP, CRM, databases, sensor data). The biggest challenge here is the heterogeneity of the data sources, as they use different formats, technologies and data models. Modern data services make it possible to extract and provide data in real time, which is essential for current ETL processes and data integration solutions. Cloud platforms such as AWS offer powerful services for data extraction, transformation and loading, especially when integrating into data warehouse environments.

You need to decide which extraction strategy best suits your systems and requirements:

Full extraction (static): When first populating your data warehouse or restoring a system, full extraction is necessary. It creates a comprehensive image of the source, but is resource-intensive and time-consuming.

Delta extraction (incremental): Delta extraction is more efficient for ongoing operation. You only read the data records that have changed since the last run. This is often done via timestamp fields or transaction logs (Change Data Capture) of the source systems.

Transfer type: You must also specify whether the extraction is synchronous or asynchronous. Synchronous extraction processes changes immediately and keeps the datasets continuously up to date, at the cost of a higher network load. Asynchronous extraction processes changes with a delay and is more resource-efficient. The choice directly influences how up-to-date your analyses will be.

The result of this phase is the raw data in the staging area, a temporary buffer that serves as the basis for the transformation. Efficient data storage in the staging area and in cloud environments is crucial in order to create a solid foundation for further data processing and analysis.
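As an illustration of the delta extraction described above, the following sketch uses a timestamp field as a watermark. The `orders` table, its columns and the sample timestamps are assumptions for the example, and an in-memory SQLite database stands in for an operational source system.

```python
import sqlite3


def extract_delta(source, last_run):
    """Return only rows changed after the previous run's watermark."""
    rows = source.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_run,),
    ).fetchall()
    # The new watermark is the newest timestamp seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_run
    return rows, new_watermark


def demo():
    source = sqlite3.connect(":memory:")  # stand-in for an operational source system
    source.execute("CREATE TABLE orders (id INTEGER, status TEXT, updated_at TEXT)")
    source.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            (1, "shipped", "2024-01-01T08:00:00"),
            (2, "open", "2024-01-02T09:30:00"),
            (3, "open", "2024-01-03T14:15:00"),
        ],
    )
    # ISO 8601 strings sort chronologically, so plain string comparison works here.
    return extract_delta(source, "2024-01-01T12:00:00")
```

Only the rows changed after the stored watermark are returned, and the watermark moves forward for the next run. Note that a purely timestamp-based approach does not see deleted records, which is one reason transaction logs (Change Data Capture) are often preferred.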

Step 2: Transform (refinement and cleansing)

The transformation phase is crucial to quality. Its task is to bring the heterogeneous raw data into the standardized format and consistent structure that your data warehouse requires and that can be used throughout the company. Without this step, you would be basing evaluations on incorrect or contradictory information.

In the transformation step, you perform several critical tasks, of which data cleansing is only the first:

Cleaning: You identify and correct data errors (e.g. typos, missing values, invalid formats). You have to decide whether to delete or correct incorrect data records or add the missing values.

Standardization and normalization: You ensure that data fields (e.g. addresses, date values) are displayed uniformly across all sources in order to guarantee semantic consistency.

Aggregation and derivation: You summarize data (e.g. monthly turnover instead of individual transactions) or derive new metrics that are important for your analyses but do not exist in the source systems.

Linking and enrichment: You merge data from different sources (e.g. customer ID from the CRM with order data from the ERP) and enrich data records with additional, relevant information.

Data profiling: Data profiling is used as an automated method to analyze and evaluate data quality during the transformation process.

The aim is to eliminate anomalies (incorrect or redundant data records) so that you end up with reliable information for your reports.
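Several of the tasks listed above can be combined in one small transformation function. The sketch below is illustrative only: the customer and order records, the two date formats and the decision to set aside records with missing amounts are assumptions, not a general rule.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical staging data: CRM customers and ERP orders with mixed date formats.
CUSTOMERS = {"C1": "Acme GmbH", "C2": "Globex AG"}
ORDERS = [
    {"customer_id": "C1", "date": "2024-01-15", "amount": "120.00"},
    {"customer_id": "C1", "date": "31.01.2024", "amount": "80.00"},
    {"customer_id": "C2", "date": "2024-02-03", "amount": ""},  # missing value
    {"customer_id": "C2", "date": "2024-02-10", "amount": "50.50"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y")


def parse_date(text):
    """Standardization: accept several source formats, return one date type."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unsupported date format: {text!r}")


def transform(orders, customers):
    monthly = defaultdict(float)
    rejected = []
    for order in orders:
        # Cleaning: records without an amount are set aside rather than guessed.
        if not order["amount"]:
            rejected.append(order)
            continue
        day = parse_date(order["date"])
        # Linking: resolve the customer ID against the CRM data.
        name = customers[order["customer_id"]]
        # Aggregation: monthly turnover per customer instead of single transactions.
        monthly[(name, day.strftime("%Y-%m"))] += float(order["amount"])
    return dict(monthly), rejected
```

Keeping the rejected records in a separate list, instead of silently dropping them, makes it possible to correct them later or to report on data quality.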

Step 3: Load (the final deployment)

In the final step, you transfer the now fully cleansed and structured data from the staging area to your target system, usually the data warehouse (DWH) or an operational data store (ODS).

Here too, there are various strategies, depending on the requirements for up-to-dateness and system availability:

Initial load: The initial loading of the DWH with the complete dataset.

Incremental load: The regular addition or updating of the delta data determined in the extraction phase.

Verification and integrity: Integrity checks must take place during the loading process to ensure that the data is correctly inserted into the target table.

Locking times may be necessary, especially for large amounts of data. These prevent analysts or BI systems from accessing the data while the loading process is still running, thus ensuring data integrity. Only when the process is successfully completed can you ensure that all queries are based on the most up-to-date and consistent information.
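An incremental load with an integrity check might look like the following sketch. The `customers` table and the sample rows are assumptions, and SQLite's `INSERT OR REPLACE` stands in for whatever upsert or merge statement your target database offers. The transaction plays a role similar to the locking window described above: other connections see either the old or the new state, never a partial load.

```python
import sqlite3


def incremental_load(conn, delta_rows):
    """Insert new rows and update changed ones inside a single transaction."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT OR REPLACE INTO customers (customer_id, name, revenue) "
            "VALUES (?, ?, ?)",
            delta_rows,
        )
        # Integrity check: every delta key must now be present in the target.
        keys = [row[0] for row in delta_rows]
        placeholders = ",".join("?" * len(keys))
        (found,) = conn.execute(
            f"SELECT COUNT(*) FROM customers WHERE customer_id IN ({placeholders})",
            keys,
        ).fetchone()
        if found != len(set(keys)):
            raise ValueError("row count mismatch after load")


def demo():
    dwh = sqlite3.connect(":memory:")  # in-memory stand-in for the target system
    dwh.execute(
        "CREATE TABLE customers "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, revenue REAL)"
    )
    incremental_load(dwh, [(1, "Alice", 100.0), (2, "Bob", 50.0)])  # initial load
    incremental_load(dwh, [(2, "Bob", 65.0), (3, "Carol", 75.0)])  # delta load
    return dwh.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()
```

After the second call, the existing record for customer 2 has been updated and customer 3 has been added, while customer 1 is untouched.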

What requirements does an ETL process place on your infrastructure?

The successful implementation and operation of an ETL process places clear demands on your IT infrastructure. You need powerful components to ensure that data processing runs quickly and reliably.

Here are the central infrastructure components that you need to provide:

The ETL server (processing instance): The transformation is computationally intensive. You therefore need a dedicated server or a virtual machine with sufficient CPU power and RAM. This server executes the actual ETL tools and manages the complex logic for data cleansing and structuring. High computing power is required to complete the processes within an acceptable time window.

The staging area: As already mentioned, the extracted raw data requires a temporary buffer before it is transformed. This staging area requires fast storage space with high I/O performance (input/output), as large amounts of data need to be read and written quickly. A high-performance database or file storage is often used for this purpose.

The target system (data warehouse): The data warehouse (DWH) or operational data store (ODS) is your final target. This system must be extremely scalable and optimized for analytical queries. Database technology plays a crucial role here, as it must store the large volumes of structured data efficiently and deliver fast response times for your business intelligence (BI) tools.

Network capacity: During the extraction and loading phases, very large volumes of data are often moved across the network - from the source systems to the ETL server and from the ETL server to the DWH. Sufficient bandwidth and a stable network are crucial to avoid bottlenecks and long runtimes.

Monitoring and maintenance: You need robust tools to monitor the ETL process. This includes scheduling and logging functions to ensure that processes start on time, errors are logged and you can react quickly in the event of failures.

By taking these requirements into account and correctly dimensioning the infrastructure, you create the basis for an efficient and reliable data flow in your company.
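The logging and error handling mentioned under monitoring can be illustrated with a small wrapper around each ETL step. This is a sketch under assumptions: the function `run_step`, its retry parameters and the simulated outage are invented for the example, and a real setup would normally hand scheduling to a dedicated scheduler or orchestration tool.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")


def run_step(name, func, retries=2, delay_seconds=0.0):
    """Run one ETL step, log duration and errors, and retry on failure."""
    for attempt in range(1, retries + 2):
        start = time.perf_counter()
        try:
            result = func()
        except Exception:
            log.exception("step=%s attempt=%d failed", name, attempt)
            if attempt > retries:
                raise  # give up so a scheduler or operator can react
            time.sleep(delay_seconds)
        else:
            elapsed = time.perf_counter() - start
            log.info("step=%s attempt=%d ok in %.3fs", name, attempt, elapsed)
            return result


def demo():
    calls = {"count": 0}

    def flaky_extract():
        calls["count"] += 1
        if calls["count"] == 1:
            raise ConnectionError("source system not reachable")  # simulated outage
        return ["row-1", "row-2"]

    return run_step("extract", flaky_extract), calls["count"]
```

In the demo, the first attempt fails and is logged with its traceback, and the second attempt succeeds. If all attempts fail, the exception is re-raised so that the failure is not silently swallowed.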

ETL vs. ELT: what is the difference?

In the modern data world, an important alternative has emerged alongside the classic ETL approach: ELT (Extract, Load, Transform). While in the ETL process the transformation takes place before loading into the target system, in the ELT process the data is first loaded completely into the target system and only then transformed. Although the same steps are involved, the changed sequence leads to a fundamentally different approach to data integration.

With classic ETL, you extract the data (Extract), refine it on a separate ETL server (Transform) and only then load the finished, cleansed data into your data warehouse (Load). The computationally intensive transformation therefore takes place outside the target system. This often makes sense with older database technologies or if you only want to store the absolutely necessary, lean data records.

The ELT approach reverses the last two steps. Here, the raw data is first completely loaded into the data warehouse after extraction (Load). The transformation (T) then takes place within the target system, typically using the high computing power of modern cloud data warehouses (such as Snowflake or BigQuery). The ELT process therefore enables faster data provision, as the transformation only takes place after loading.

The major advantage of the ELT approach for you lies in its flexibility and raw data storage. As all data is immediately available in the DWH, you can carry out transformations at any time and multiple times with different logics. You don't need a dedicated ETL server because you use the computing power of your data warehouse. If you opt for ELT, this is usually an indicator that you are working with very large amounts of data (big data) and want to take advantage of cloud scalability.
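The changed order can be made concrete with a short sketch. Here the raw records are loaded unchanged and the transformation runs afterwards as SQL inside the target. The table names and sample rows are assumptions, and an in-memory SQLite database stands in for a cloud data warehouse.

```python
import sqlite3


def elt_demo():
    dwh = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

    # Load: raw records land in the target unchanged, including the faulty one.
    dwh.execute("CREATE TABLE raw_orders (order_id TEXT, order_date TEXT, amount TEXT)")
    dwh.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [
            ("A1", "2024-01-15", "120.00"),
            ("A2", "2024-01-31", "80.00"),
            ("A3", "2024-02-10", ""),  # missing amount stays in the raw layer
            ("A4", "2024-02-11", "50.50"),
        ],
    )

    # Transform: done inside the target with SQL, and repeatable with other logic later.
    dwh.execute(
        "CREATE TABLE monthly_turnover AS "
        "SELECT substr(order_date, 1, 7) AS month, "
        "       SUM(CAST(amount AS REAL)) AS turnover "
        "FROM raw_orders WHERE amount <> '' "
        "GROUP BY month"
    )
    return dwh.execute(
        "SELECT month, turnover FROM monthly_turnover ORDER BY month"
    ).fetchall()
```

Because `raw_orders` is kept, a second transformation with different logic (for example, one that treats missing amounts differently) can be run at any time without extracting the data again.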

Relevant software: tools for your ETL process

To implement ETL and ELT processes efficiently, you need the right software. The tool landscape is diverse and can be roughly divided into three categories:

Cloud-native & hyperscaler tools (ELT-focused)

These tools are often tightly integrated into cloud platforms and are ideally suited to the modern ELT approach as they utilize the computing power of the cloud:

Azure Data Factory (ADF): Microsoft Azure's central integration tool. Ideal if you already use the Azure Cloud.

Google Cloud Dataflow: A fully managed service from Google that is particularly suitable for complex streaming and batch data processing.

AWS Glue: The serverless ETL service from Amazon Web Services that can automatically recognize metadata (schemas).

Traditional ETL platforms

These established tools offer very extensive functions, high scalability and are often used in complex enterprise environments:

Informatica PowerCenter: One of the market leaders, known for its stability and extensive connectivity.

IBM DataStage: A powerful platform that is particularly suitable for large data volumes and high data quality requirements.

Open source solutions

If you are looking for a cost-effective and flexible alternative, open source tools are a good option that you can customize yourself:

Apache NiFi: An easy-to-use, powerful and reliable system for automating the flow of data between software systems.

Choosing the right tool depends heavily on your existing infrastructure, your budget and the complexity of your data integration requirements.

Conclusion: Your key to data sovereignty

The ETL process is far more than just a technical acronym; it is the foundation on which you build your entire data strategy. Whether you choose the classic ETL route or prefer the modern flexibility of ELT, the goal remains the same: to transform raw, distributed data into a consistent, high-quality information base that allows you to make faster, more informed business decisions.

However, choosing and correctly implementing the right approach is complex and depends on your specific infrastructure and requirements. This is exactly where we are at your side.

As your partner for data solutions, we offer you comprehensive expertise: we analyze your data landscape, advise you on the choice between ETL and ELT and take over the professional implementation and optimization of your ETL processes. We guarantee you a stable, high-performance data flow so that you can concentrate fully on analysis and increasing your business success.

Talk to us - together we will bring order and value to your data.

FAQ: Frequently asked questions about the ETL process

What is the difference between data integration and ETL?

Data integration is the generic term and describes the overall goal, namely the merging of data from different sources. The ETL process (Extract, Transform, Load) is one of the central methods or techniques used to achieve this goal. Data integration can also include other techniques such as virtualization or ELT.

What role does the data warehouse (DWH) play in the ETL process?

A data warehouse is a central database system that has been specially optimized for analytical purposes. It stores historical and consolidated data. The ETL process plays the main role in building and maintaining the DWH by extracting the raw data from operational systems, cleansing it and loading it into the DWH. The DWH is therefore the primary target system of the ETL process.

How often should an ETL process run?

The frequency of execution depends on how up-to-date your data needs to be:

Batch processing: For daily, weekly or monthly reporting, the process runs at fixed time intervals (batches).

Near real-time: If you require faster information, the process is carried out more frequently (e.g. every 5 minutes) or the ELT approach with streaming technologies is used to provide the data almost immediately.

What are the risks of a faulty ETL process?

A faulty ETL process can have serious consequences. The main problem is poor data quality in your data warehouse, which leads to decisions based on incorrect or incomplete information. Inefficient processes can also lead to unnecessarily high infrastructure costs or overload your operational source systems.

What is data lineage?

Data lineage describes the life cycle of a data set, from its creation in the source through all transformation steps to its final storage in the data warehouse. A clearly documented ETL process supports data lineage and makes the origin of your data traceable - an important aspect for audits and compliance.
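One simple way to make lineage tangible is to write an audit entry for every load. The sketch below is an assumption-laden example rather than a standard: the field names, the source and target identifiers and the idea of fingerprinting the entry are all chosen for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


def lineage_record(source, target, steps, rows_in, rows_out):
    """Build one audit entry describing where a loaded dataset came from."""
    record = {
        "source": source,
        "target": target,
        "transformations": list(steps),
        "rows_in": rows_in,
        "rows_out": rows_out,
    }
    # A hash over the stable fields lets you notice if the entry is changed later.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["fingerprint"] = hashlib.sha256(payload).hexdigest()
    record["loaded_at"] = datetime.now(timezone.utc).isoformat()
    return record
```

A call such as `lineage_record("crm.customers", "dwh.dim_customer", ["trim names", "drop duplicates"], 120, 118)` documents that two records were removed on the way from source to target, and which steps were applied.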