Deepfakes, fake news: what is still real? How can I recognize fake images?

In a world in which a photo no longer shows what really happened - but what someone wants you to believe - the line between reality and falsification is blurring. The targeted dissemination of misinformation in the form of manipulated images makes it harder to tell what is true. Fake images now circulate in news coverage, on platforms and in social networks. With modern AI tools and image editing programs, images can be manipulated with just a few clicks - and this can have serious consequences.

The effects: Fake images influence all areas of digital communication and can permanently shake trust in the media.

Whether it's fake damage images for insurance companies, AI-generated photos of alleged relatives in perfidious scams or deceptively real deepfakes on social media - visual manipulation is no longer an exception, but a real threat. The spread of fake images on various platforms and networks is increasingly undermining the credibility of news.

This raises the question: How do you recognize what is real? And how can you protect yourself from the growing danger of fake images? Given the flood of manipulated images on the internet, it is hard to keep track and to spot fakes reliably.

In this blog post, you will find out why fake images are so dangerous, what you should look out for - and how our software helps companies to make the truth behind an image visible.

How can you tell if an image has been manipulated?

Today, manipulated images are a key tool in modern disinformation campaigns - and unfortunately they are often difficult to recognize. Some forgeries may immediately catch your eye, others appear completely genuine at first glance. This makes it all the more important that you learn to pay attention to the fine details that don't match.

Analyzing features such as patterns, textures and lighting conditions plays a crucial role in detecting image manipulation, as inconsistencies in these areas often indicate fakes.

What should you pay particular attention to in pictures?

Inconsistent lighting and shadows: Take a close look! Do the shadows look logical? Is the light coming from a realistic direction? Pay particular attention to whether the face and its shadows look natural - implausible shadows on the face can be a clear sign of image editing.

Errors in the background: Distorted structures, duplicate objects or strangely blurred areas in the background can be indications of AI-generated or subsequently altered content.

Images taken out of context: Not every fake image is manipulated - some shots are real but tell a false story. Images of past events are often disseminated as current sensations in order to deliberately deceive or stir up emotions.

Manipulative cropping: If an image is heavily cropped, crucial information may be missing. An altered image section can be deliberately used to conceal important details and thus create a deception. What is deliberately left out can be just as manipulative as what you see.

Conspicuous or incorrect text fragments: Look out for illogical, misspelled or distorted text fragments in the image. Such text fragments can be identified by automatic text recognition (OCR) and are often an indication of manipulation or AI-generated content.

Even if a single clue does not provide proof, a clear overall picture often emerges when several of these warning signals come together. A critical look at light, perspective, details, text and context can help you to expose many fakes - or at least raise reasonable doubts.

Also note: even a screenshot can be manipulated. Therefore, check screenshots of transactions or documents particularly critically, as they can easily be faked and are not always suitable as evidence.

However, in an age where AI can create deceptively real images, the human eye is often no longer enough. This is where technical solutions come into play - so that you no longer have to decide alone what is genuine and what is not.

This damage was faked.

How do I recognize images created by AI?

Traditional image editing is not the only problem - artificial intelligence is also increasingly being used to create or modify images. At the same time, AI-based tools that run directly in the browser on a computer or smartphone can check images and videos for manipulation and read out their metadata.

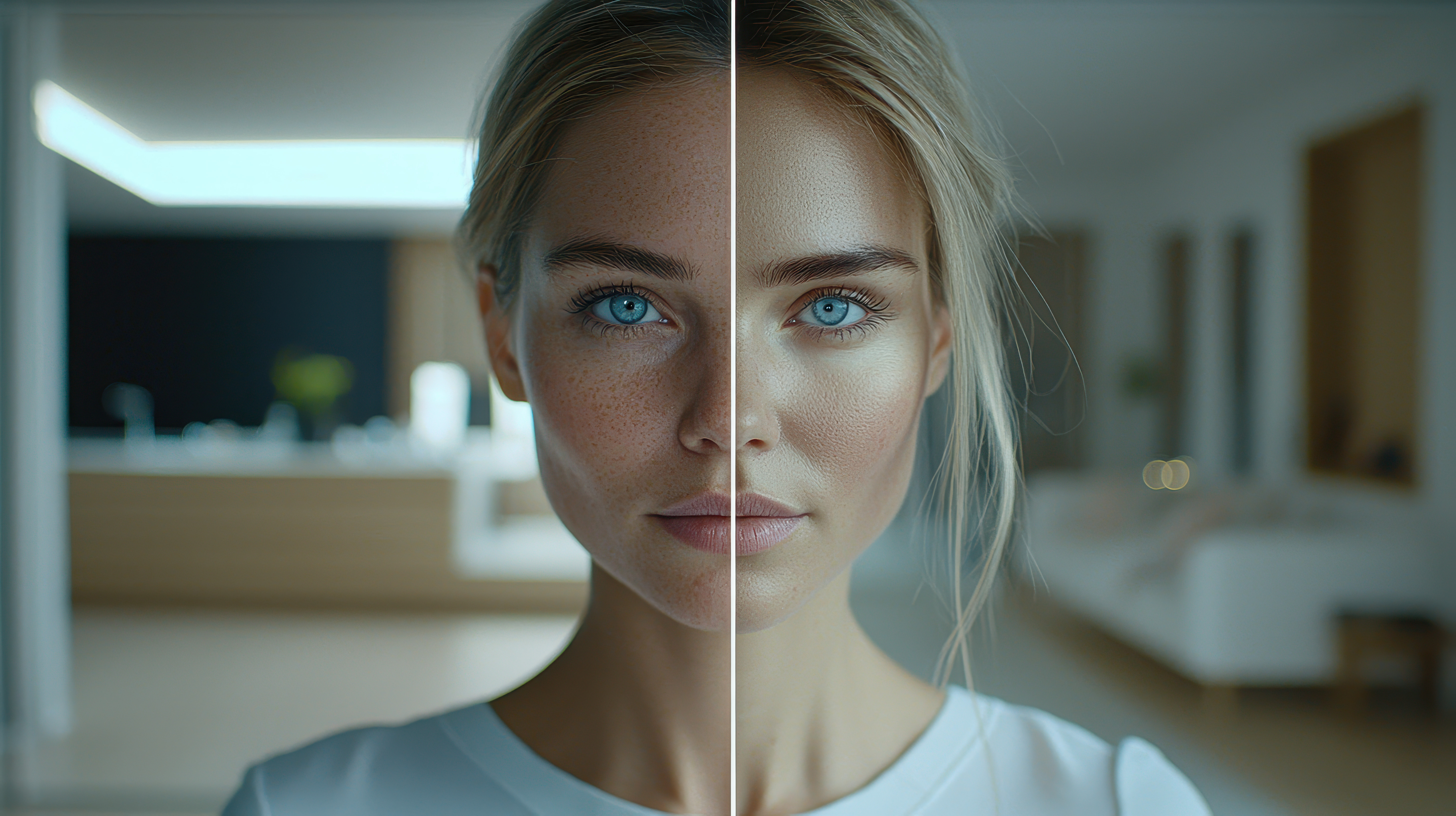

The result: deceptively real deepfakes. Tools such as Midjourney, DALL-E or similar software can create images in seconds that look like real photographs.

But there are typical signs that you can look out for to unmask AI images.

Signs that indicate AI-generated images

Illogical details in the image: AI models are trained on huge amounts of data - if this data is incomplete or incorrect, strange elements are created. Look out for twisted fingers, incorrectly positioned ears, blurred objects or distorted backgrounds. A well-known example is an AI-generated viral image that depicts a down jacket in an unusual way.

Unnatural shadows and light sources: Especially with faces, it is noticeable that light and shadows are often not realistic. Look closely at the areas around the eyes, under the brows or along the nose. If the shadows are not correct, this is a strong indication of a deepfake.

Reflections in glasses or lenses: Is the person pictured wearing glasses? Then it is worth taking a look at the reflections. If they are completely absent or appear illogical, caution is advised.

Skin that is too perfect: Many AI-generated portraits show flawless, almost plastic-like skin without pores or natural imperfections. Although this may appear beautiful at first glance, it is often an indication of artificial origin.

Conspicuous texts or text fragments: AI-generated images often contain incorrect or distorted texts. The analysis of texts and text content in AI images, for example using OCR technologies, can help to detect forgeries.

Despite all the tips: Deepfakes are getting better and more realistic. Even trained eyes reach their limits. Deepfakes, in which faces in videos are manipulated or replaced by AI technologies, are particularly difficult to detect.

That's why there are now specialized tools that you can use to technically check images. The accuracy of these tools is crucial for reliably identifying manipulated images and videos.

How can you check or trace a picture?

Have you seen a picture and are unsure whether it is genuine or where it comes from? Fortunately, there are easy ways for you as a private individual to check a picture or trace its origin.

Here's what you can do:

Use Google reverse image search: Go to Google Images, click on the camera icon and upload the image - or paste the image link if the image is online. By right-clicking on the image in the browser, you can also copy the image address or call up further options. The search engine shows you on which websites the image has already been used - often including publication date and context.

Use TinEye or other image search services: In addition to Google, there are specialized services such as TinEye, which pay particular attention to visual similarity and can find alternative versions or older publications of an image.

Read metadata: If you have the image file on your device, you can try to read out the so-called EXIF data. This often contains information about when the picture was taken, the camera or the software used. Free tools such as ExifTool or Metapicz can help you with this. Note, however, that many images on the internet have had their metadata removed.

Check the social media context: If the image is shared via WhatsApp, Telegram or Instagram, for example, it is worth taking a look at the comments, timestamps or original accounts. You will often find clues as to when and why the image was first shared.
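
The idea of matching images by visual similarity can be illustrated with a small Python sketch. It uses an "average hash", a generic perceptual hashing technique, and is not a description of how Google or TinEye work internally. The function names and the tiny 4x4 grayscale grids are assumptions chosen for illustration; a real image would first be converted to grayscale and scaled down.

```python
def average_hash(pixels):
    """Return a list of bits: 1 where a pixel is brighter than the image mean.

    `pixels` is a 2D list of grayscale values (an already downscaled image).
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equally long hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Hypothetical example data: an "original", a brightened copy, another image.
original = [[10, 20, 200, 210],
            [15, 25, 205, 215],
            [10, 20, 200, 210],
            [15, 25, 205, 215]]
brightened = [[value + 10 for value in row] for row in original]
different = [[10] * 4, [20] * 4, [200] * 4, [210] * 4]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(different)))   # 8
```

A small distance suggests that two files show the same picture even if one was brightened or recompressed, which is why older versions of an image can be found even when the file itself is not identical.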

These steps can help you to expose fakes, question manipulated content and avoid falling for targeted disinformation. Especially in times of AI and deepfakes, a healthy dose of doubt is an important protective mechanism.
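
If you are comfortable with a little Python, the metadata step can also be sketched in code. The following is a minimal illustration, not a replacement for ExifTool: it only checks whether a JPEG file still carries an EXIF block at all, using nothing but the standard library. The function name `has_exif` and the synthetic sample bytes are assumptions made for this example.

```python
import struct


def has_exif(jpeg_bytes: bytes) -> bool:
    """Return True if the JPEG data contains an APP1 segment with EXIF data."""
    if not jpeg_bytes.startswith(b"\xff\xd8"):
        raise ValueError("not a JPEG file (missing start-of-image marker)")
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            break  # malformed segment structure, stop scanning
        marker = jpeg_bytes[pos + 1]
        if marker in (0xDA, 0xD9):
            break  # start of compressed image data or end of image
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        payload = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Synthetic example: a JPEG header with one EXIF segment and no image data.
sample = b"\xff\xd8" + b"\xff\xe1\x00\x0c" + b"Exif\x00\x00MM\x00*" + b"\xff\xd9"
print(has_exif(sample))  # True
```

For a real file you would pass the file contents, for example `has_exif(open("photo.jpg", "rb").read())`, where `photo.jpg` is a placeholder name. A result of `False` only means the metadata is absent, which is common for images from the internet and not proof of manipulation.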

How can companies and organizations protect themselves against fraud through fake images?

Companies are increasingly faced with the challenge of protecting themselves against image manipulation and fraudulent deepfakes, which can cause financial and reputational damage. They are often confronted with forged documents and manipulated content on various platforms.

Many have neither the time nor the staff to use and evaluate a variety of different tools. Different applications often involve complex processes, making it difficult to use them efficiently in day-to-day business. This is why companies increasingly rely on a central verification method that bundles and automates various analysis procedures.

This is exactly where fraudify comes in: our image forensics solution uses highly developed AI to reliably detect manipulated images.

In doing so, fraudify analyzes various image features such as image noise, metadata, blurring and transitions between image areas to find indications of retouching, montage or AI-based manipulation. Checking sources and analyzing all features and content is crucial to detecting fraud and validating the authenticity of documents and images.
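
To give a rough idea of what a noise check can look like, here is a simplified Python sketch. It is an illustration under stated assumptions and not fraudify's actual method: it splits a grayscale image into blocks, uses the plain pixel variance of each block as a crude stand-in for image noise, and flags blocks that deviate strongly from the typical value. The function names, the block size and the factor of 10 are hypothetical choices.

```python
from statistics import median, pvariance


def block_variances(pixels, block=4):
    """Map each full block's (row, column) index to its pixel variance."""
    height, width = len(pixels), len(pixels[0])
    result = {}
    for top in range(0, height - block + 1, block):
        for left in range(0, width - block + 1, block):
            values = [pixels[y][x]
                      for y in range(top, top + block)
                      for x in range(left, left + block)]
            result[(top // block, left // block)] = pvariance(values)
    return result


def suspicious_blocks(pixels, block=4, factor=10.0):
    """Return blocks whose variance is far above or below the median."""
    variances = block_variances(pixels, block)
    typical = median(variances.values())
    flagged = [position for position, variance in variances.items()
               if variance > typical * factor or variance * factor < typical]
    return sorted(flagged)


# Hypothetical 8x8 image: light sensor-like noise everywhere, except for a
# perfectly smooth lower-right block, as might remain after retouching.
image = [[100 + (2 * x + 3 * y) % 5 for x in range(8)] for y in range(8)]
for y in range(4, 8):
    for x in range(4, 8):
        image[y][x] = 100

print(suspicious_blocks(image))  # [(1, 1)]
```

A flagged block is only a hint that a region behaves differently from the rest of the image. Smooth areas such as sky or walls also have low variance, which is one reason why several image features are evaluated together.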

This makes it possible, for example, to detect manipulated damage images in the insurance industry, where fraudsters use fake photos to obtain higher compensation. But fraudify also protects companies in other industries from fake images that are used as falsified evidence or to deceive in fraudulent scenarios.

Through the automated and traceable analysis of images, fraudify provides a reliable basis on which companies can make decisions. Combined with targeted training and awareness-raising for employees, this technology significantly increases protection against deepfake attacks and helps to detect and ward off fraud attempts at an early stage. You can find out more about the dangers of image manipulation here.

In this way, fraudify provides companies with an important tool to protect themselves effectively in an age of increasingly sophisticated digital manipulation.

Conclusion

At a time when image manipulation through AI and digital tools is becoming ever easier and more sophisticated, protection against fraud and deception is essential for companies. Checking all content and sources is crucial in order to detect manipulation at an early stage and ensure the authenticity of documents and images.

Companies should use tools to make the verification of content, sources and metadata efficient. Instead of having to deal with a multitude of individual tools, however, companies need a central, reliable solution such as fraudify, which combines and automatically carries out all relevant verification procedures.

This allows manipulated images and altered content to be detected quickly, risks to be minimized and decisions to be made on a secure basis.

Only with intelligent image forensics are you really equipped to prevent fraudsters from gaining a foothold and effectively protect your company. Ultimately, it is essential to check everything - from the content to the sources to the platforms - in order to reliably expose manipulation and fakes.

FAQ - How can I recognize fake images?

Look out for illogical lighting and shadows, distorted or duplicate objects in the background, strangely blurred areas and unusual proportions (e.g. twisted fingers or unnaturally placed ears). Several such anomalies together strongly suggest manipulation.

Typical indications are overly perfect, "plastic-like" skin without pores, missing or illogical reflections (e.g. in spectacle lenses), distorted or incorrect text fragments and strange details in small image elements. Such artifacts occur when the AI model combines information incorrectly.

Use Google Images' reverse image search or specialized services such as TinEye to find identical or older versions of the image on the web. This can often reveal the original context or the first publication date.

EXIF data (recording time, camera, software) can provide clues, but is often removed or altered. If metadata is missing or implausible, this can be an indication - but it is not proof on its own. Tools such as ExifTool or Metapicz can help you read the data.

Automated tests analyze image noise, transitions (splicing), blurring, light consistency and metadata as well as OCR analyses for text errors. Combinations of these tests provide reliable indications of retouching, editing or AI generation.

Increasingly rarely - especially with high-quality deepfakes, the human eye is often no longer sufficient. Small discrepancies may help, but technical checks or specialized tools are necessary for reliable results.

Check briefly: Trace the source (reverse image search), read the context, check the metadata (if possible) and look out for conspicuous details. For important or sensitive content, it is better to verify several sources before distributing the image.

Companies should introduce central verification procedures using software such as fraudify, train employees and rely on automated image forensics solutions that bundle various verification procedures and evaluate them in a scalable manner (e.g. to detect falsified damage images in insurance claims).

Context is crucial - a genuine photo can be used misleadingly if the date, location or accompanying text are manipulated. For this reason, research into where and when the image was first published is always part of the check.

Yes - solutions such as fraudify, which automate various analyses (noise and splicing checks, metadata, OCR, source checks) and log them in a traceable manner, are particularly useful for companies because they check large volumes of images efficiently and detect cases of fraud in a documented manner.